External Continual Learner (ECL): Scaling In-Context Learning without Forgetting and without RAG. Imagine if an LLM could learn continually without fine-tuning and without PEFT adapters.

Scaling Continual Learning with an External Continual Learner (ECL)

Continual learning (CL) aims to allow models to incrementally learn new tasks, but traditional approaches often suffer from a phenomenon called catastrophic forgetting (CF). This means that as the model learns new tasks, it tends to forget what it learned in previous tasks. While in-context learning (ICL) offers a way to leverage the knowledge within large language models (LLMs) without updating parameters, it is difficult to scale because it requires adding training examples to prompts for each new class, which can lead to excessively long prompts.

The challenge is to enable LLMs to learn incrementally without forgetting, and without relying on traditional fine-tuning methods. This is where the External Continual Learner (ECL) comes into play.

What is an External Continual Learner (ECL)?

An External Continual Learner (ECL) is a novel approach designed to assist in-context learning in LLMs. Instead of updating the LLM’s parameters, which can cause CF, the ECL acts as a module outside the LLM that incrementally learns to select the information most relevant to each new input instance. Because only this small selection reaches the prompt, the approach scales much better and runs more efficiently.

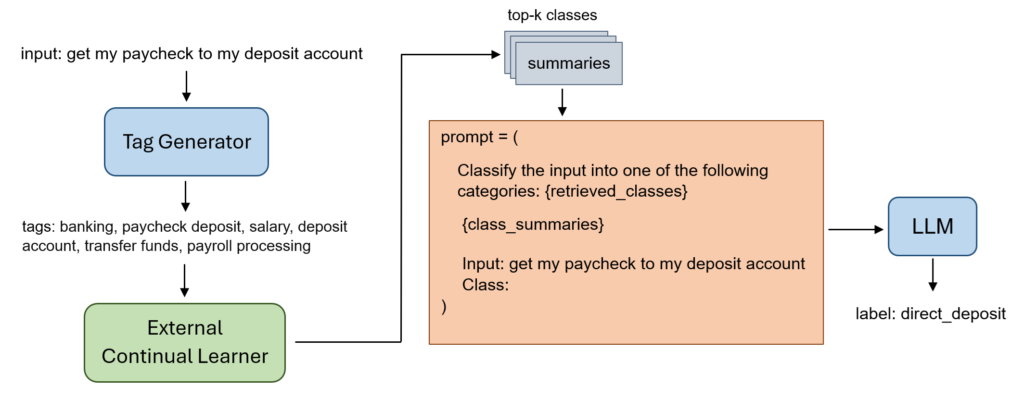

The core idea behind the External Continual Learner (ECL) is to reduce the number of candidate classes that must be included in the ICL prompt. Instead of including examples from every class encountered so far, the ECL pre-selects a small set of ‘k’ classes that are most likely to be relevant to the current input.

Here’s how it works:

Tag Generation: The input text is processed by the LLM to generate a list of descriptive tags. These tags capture the essential semantics of the input.

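Gaussian Class Representation: Each class is represented by a Gaussian distribution over the embeddings of its tags, with a class-specific mean and a covariance matrix shared across all classes. This gives every class a compact statistical profile that new inputs can be compared against.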

Mahalanobis Distance Scoring: The ECL computes the Mahalanobis distance between the input’s tag embeddings and each class distribution, then selects the ‘k’ classes with the smallest distance as candidates.
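The scoring step can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the InCA implementation: the function names are hypothetical, tag embeddings are assumed to be precomputed vectors, and a class’s score is assumed to be the mean squared Mahalanobis distance over all of the input’s tags.

```python
import numpy as np


def mahalanobis_sq(x: np.ndarray, mu: np.ndarray, cov_inv: np.ndarray) -> np.ndarray:
    """Squared Mahalanobis distance (x - mu)^T Sigma^{-1} (x - mu) for each row of x."""
    d = x - mu
    return np.einsum("ij,jk,ik->i", d, cov_inv, d)


def top_k_classes(tag_embs: np.ndarray, class_means: dict[str, np.ndarray],
                  shared_cov: np.ndarray, k: int) -> list[str]:
    """Hypothetical ECL scorer: rank classes by the mean squared Mahalanobis
    distance between the input's tag embeddings and each class mean, using
    one covariance matrix shared by all classes, and return the k closest."""
    cov_inv = np.linalg.inv(shared_cov)
    scores = {
        label: float(mahalanobis_sq(tag_embs, mu, cov_inv).mean())
        for label, mu in class_means.items()
    }
    return sorted(scores, key=scores.get)[:k]  # smaller distance = more likely


# Toy 2-D example: three classes and two tag embeddings for one input.
class_means = {
    "sports": np.array([1.0, 0.0]),
    "finance": np.array([0.0, 1.0]),
    "weather": np.array([-1.0, 0.0]),
}
shared_cov = np.array([[1.0, 0.0], [0.0, 0.25]])
tags = np.array([[0.9, 0.1], [1.1, -0.1]])  # e.g. embeddings of "match", "goal"
print(top_k_classes(tags, class_means, shared_cov, k=2))  # prints ['sports', 'weather']
```

Only the ‘k’ returned labels go on to the prompt-building stage; the remaining classes never cost any prompt tokens.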

Why Use an External Continual Learner (ECL)?

The ECL addresses several critical issues in continual learning:

Scalability: It keeps prompts short by including only a small subset of relevant classes in the in-context prompt, no matter how many classes have been learned.

Performance: It maintains high performance by keeping irrelevant information, which can degrade results, out of the prompt.

Catastrophic Forgetting: The ECL learns incrementally without touching the LLM’s parameters (it only updates its own class statistics), which avoids CF.

Inter-task Class Separation (ICS): By using Gaussian class representation, the ECL implicitly addresses the challenge of separating new classes from older ones.
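A back-of-the-envelope calculation makes the scalability argument concrete. Assume, purely for illustration, that every class contributes a summary of roughly s tokens, that C classes have been learned so far, and that the ECL passes k of them to the prompt (the fixed instruction and input text are ignored):

```latex
L_{\text{all}} \approx C \cdot s, \qquad
L_{\text{ECL}} \approx k \cdot s, \qquad
\frac{L_{\text{ECL}}}{L_{\text{all}}} = \frac{k}{C}
```

With hypothetical values C = 100, k = 3 and s = 100, the class-related part of the prompt drops from about 10,000 tokens to about 300, and with the ECL it stays near 300 as C keeps growing.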

InCA: Leveraging the Power of an ECL for In-Context Learning

InCA, or In-context Continual Learning Assisted by an External Continual Learner, is a method that integrates an ECL with ICL. It uses the ECL to pre-select classes and then constructs a more focused in-context learning prompt. InCA significantly outperforms traditional CL baselines and makes in-context class-incremental learning (CIL) both feasible and efficient.

How InCA Works with the External Continual Learner (ECL)

InCA uses the External Continual Learner (ECL) in the following way:

Input text goes through the LLM to generate semantic tags.

The ECL uses the generated tags to identify the ‘k’ most likely classes.

Class summaries for the top ‘k’ classes are used to construct the final in-context prompt, from which the LLM predicts the class.
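The three steps can be wired together as in the sketch below. It is a hypothetical outline, not InCA’s code: the prompt wording, the function names, and the stand-in callables are all assumptions. In a real system `generate_tags` and `call_llm` would be LLM calls, and `select_top_k` would be the ECL’s Mahalanobis ranking over the embedded tags.

```python
from collections.abc import Callable, Sequence


def build_inca_prompt(text: str, candidates: Sequence[str], summaries: dict[str, str]) -> str:
    """Assemble an ICL prompt that lists only the candidate classes' summaries."""
    parts = ["Classify the input into exactly one of these classes.", ""]
    for label in candidates:
        parts.append(f"Class: {label}\nSummary: {summaries[label]}\n")
    parts.append(f"Input: {text}")
    parts.append("Answer with the class name only.")
    return "\n".join(parts)


def inca_predict(
    text: str,
    generate_tags: Callable[[str], list[str]],
    select_top_k: Callable[[list[str], int], list[str]],
    summaries: dict[str, str],
    call_llm: Callable[[str], str],
    k: int = 3,
) -> str:
    tags = generate_tags(text)             # 1. LLM turns the input into semantic tags
    candidates = select_top_k(tags, k)     # 2. ECL narrows the label space to k classes
    prompt = build_inca_prompt(text, candidates, summaries)  # 3. focused ICL prompt
    return call_llm(prompt).strip()        # the LLM's answer is the predicted class


# Hypothetical stand-ins for the LLM and the ECL so the sketch runs offline.
def fake_tags(text: str) -> list[str]:
    return ["match", "goal", "league"]


def fake_ecl(tags: list[str], k: int) -> list[str]:
    return ["sports", "weather", "finance"][:k]


def fake_llm(prompt: str) -> str:
    return " sports\n"


summaries = {
    "sports": "Texts about games, teams and athletes.",
    "weather": "Texts about forecasts, temperature and storms.",
    "finance": "Texts about markets, prices and companies.",
}
print(inca_predict("United scored twice in the derby.", fake_tags, fake_ecl,
                   summaries, fake_llm, k=2))  # prints sports
```

Note that the prompt only ever contains ‘k’ summaries, so its size does not depend on how many classes the ECL has learned.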

InCA aims to exploit a powerful LLM without fine-tuning it, and the ECL is what makes this possible. The method learns incrementally by updating only the class means and a shared covariance matrix in the ECL module; the LLM’s parameters are never altered. This makes it robust and efficient, and it also removes the need for task IDs.
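Because the ECL stores only class means and one shared covariance matrix, learning a class amounts to updating a few running statistics. The sketch below is a hypothetical class, not the paper’s code: it folds each batch of tag embeddings into the statistics with the standard parallel mean/variance merge, so old data is never revisited and existing class means are left untouched when a new class arrives. Normalizing the pooled scatter by the total count and adding a small ridge term are assumptions made to keep the covariance well defined and invertible.

```python
import numpy as np


class IncrementalGaussianECL:
    """Hypothetical sketch: per-class means plus one shared (pooled within-class)
    covariance, updated batch by batch without storing past embeddings."""

    def __init__(self, dim: int, ridge: float = 1e-3):
        self.dim = dim
        self.ridge = ridge                       # assumption: keeps covariance invertible
        self.counts: dict[str, int] = {}
        self.means: dict[str, np.ndarray] = {}
        self.scatter = np.zeros((dim, dim))      # sum of within-class scatter matrices
        self.total = 0                           # number of embeddings seen so far

    def update(self, label: str, emb: np.ndarray) -> None:
        """Fold a batch of tag embeddings (n, dim) for one class into the statistics."""
        n_b = len(emb)
        mu_b = emb.mean(axis=0)
        d = emb - mu_b
        m2_b = d.T @ d                           # scatter of this batch around its own mean
        if label not in self.counts:             # new class: just add its statistics
            self.counts[label] = n_b
            self.means[label] = mu_b
            self.scatter += m2_b
        else:                                    # existing class: parallel-variance merge
            n_a, mu_a = self.counts[label], self.means[label]
            n = n_a + n_b
            delta = mu_b - mu_a
            self.means[label] = mu_a + delta * (n_b / n)
            self.counts[label] = n
            self.scatter += m2_b + np.outer(delta, delta) * (n_a * n_b / n)
        self.total += n_b

    @property
    def shared_cov(self) -> np.ndarray:
        """Pooled within-class covariance shared by all classes."""
        return self.scatter / self.total + self.ridge * np.eye(self.dim)


rng = np.random.default_rng(0)
ecl = IncrementalGaussianECL(dim=4)
ecl.update("sports", rng.normal(size=(10, 4)))        # task 1
ecl.update("finance", rng.normal(size=(8, 4)) + 2.0)  # task 2: old mean is not touched
print(sorted(ecl.means), ecl.shared_cov.shape)        # prints ['finance', 'sports'] (4, 4)
```

The incremental result matches what a batch computation over all data seen so far would give, which is why no old examples need to be kept or replayed.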

The External Continual Learner (ECL) presents a novel and scalable solution to the challenges of continual learning. By integrating an ECL with ICL, methods like InCA demonstrate significant improvements in both accuracy and scalability, making this a promising path for the future of CL. By reducing prompt size and filtering out irrelevant information, InCA overcomes the limitations of traditional in-context learning in class-incremental scenarios.

This approach balances scalability and performance, making it well suited to continual learning scenarios. The External Continual Learner (ECL) is the key component that makes in-context continual learning practical.
